Deploy Any ML Model with FastAPI and Docker

Introduction

Machine Learning (ML) models are revolutionizing various industries, from healthcare to finance. These models, trained on massive datasets, can make predictions, identify patterns, and automate tasks. However, getting a trained ML model from development to production can be a challenge. Traditional deployment methods often involve complex infrastructure setup and environment management. This can lead to bottlenecks and hinder the accessibility of these powerful tools.

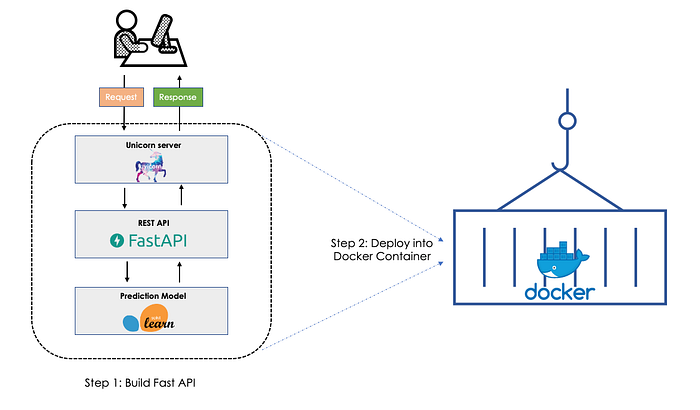

Here’s where FastAPI and Docker come in. FastAPI, a high-performance web framework for Python, simplifies building APIs for serving ML models. Docker, a containerization platform, packages the application with its dependencies into a lightweight, portable unit. Combining these two technologies allows for streamlined deployment of ML models, making them readily available for real-world applications.

Understanding FastAPI and Docker for ML Deployment

FastAPI is a modern Python web framework gaining traction for its ease of use and focus on developer experience. It offers features like automatic data type validation, automatic documentation generation, and efficient asynchronous handling of requests. These features make it ideal for building APIs that handle data exchange between your ML model and external applications.

Docker, on the other hand, is a containerization platform that packages applications with their dependencies into standardized units called containers. Containers isolate an application from the libraries and configuration installed on the host system, so it behaves consistently across environments. This isolation matters for ML models because they run with the same library versions and configuration that were used during development.

Building the ML API with FastAPI

Let’s walk through building an API for your ML model using FastAPI. We’ll assume you have a basic understanding of Python and your chosen ML library (e.g., scikit-learn, TensorFlow).

Project Setup

Create a new Python project directory.

Install the required libraries using pip:

    pip install fastapi uvicorn <your_ml_library>

Replace <your_ml_library> with the specific library you’re using for your ML model.

Defining the ML Model (model.py)

Here, we’ll load the trained model from a file format like pickle or joblib.

    import pickle

    # Load the trained model
    with open("model.pkl", "rb") as f:
        model = pickle.load(f)


    def predict(data):
        # Preprocess data if necessary
        # Make predictions using the loaded model
        predictions = model.predict(data)
        return predictions

This is a basic example. You might need to add preprocessing steps specific to your model and data format.
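
As a rough illustration of the preprocessing step mentioned above, the sketch below turns a dictionary of named features into the single-row, two-dimensional input that scikit-learn-style predict methods typically expect. The feature names and the to_model_input helper are hypothetical and not part of any library; substitute the columns your model was trained on.

```python
from typing import Any, List, Mapping

# Hypothetical feature order; it must match the column order used in training.
FEATURE_ORDER = ["age", "income", "tenure_months"]


def to_model_input(raw: Mapping[str, Any]) -> List[List[float]]:
    """Convert one record of named features into a 2D list with a single row."""
    missing = [name for name in FEATURE_ORDER if name not in raw]
    if missing:
        raise ValueError(f"Missing features: {missing}")
    # Cast to float so that ints and numeric strings are handled uniformly.
    row = [float(raw[name]) for name in FEATURE_ORDER]
    return [row]


example = to_model_input({"age": 42, "income": 55000.0, "tenure_months": "18"})
print(example)  # [[42.0, 55000.0, 18.0]]
```

Fixing the feature order in one place keeps the API from silently feeding columns to the model in a different order than it saw during training.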

Creating the API Endpoints (main.py)

This is where FastAPI shines. We’ll define an API endpoint that receives user input, feeds it to the loaded model for prediction, and returns the results as JSON:

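    from typing import List

    from fastapi import FastAPI
    from pydantic import BaseModel

    # Import under another name so the endpoint function below does not shadow it
    from model import predict as model_predict

    app = FastAPI()


    class UserInput(BaseModel):
        # Define the format of the expected user input data.
        # Placeholder field: one list of numeric features; adapt it to your model.
        features: List[float]


    @app.post("/predict")
    async def predict(data: UserInput):
        # Wrap the features in a list: most models expect a 2D batch of rows
        predictions = model_predict([data.features])
        # Assumes a NumPy array result (as with scikit-learn); make it JSON-friendly
        return {"predictions": predictions.tolist()}

This code defines a /predict endpoint that accepts user input in a format specified by the UserInput class. The predict function from model.py is imported as model_predict, because an endpoint function that is also named predict would otherwise shadow it and end up calling itself. It is called with the received data, and the resulting predictions are returned as JSON.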
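
To make the validation step more concrete, here is a minimal sketch of how a Pydantic model accepts or rejects input on its own, outside of any running server. The features field is a hypothetical schema chosen for illustration; your UserInput class should describe whatever your model actually needs.

```python
from typing import List

from pydantic import BaseModel, ValidationError


class UserInput(BaseModel):
    # Hypothetical schema: one flat list of numeric feature values.
    features: List[float]


# Well-formed input is parsed into a typed object.
ok = UserInput(features=[5.1, 3.5, 1.4, 0.2])
print(ok.features)

# Malformed input is rejected before any model code would run.
try:
    UserInput(features=["not-a-number"])
except ValidationError as exc:
    print(f"rejected with {len(exc.errors())} validation error(s)")
```

When the same model is used as an endpoint parameter, FastAPI performs this check for every request and answers invalid payloads with a 422 response instead of calling your function.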

Running the API Locally

Use uvicorn to start the FastAPI application in development mode:

    uvicorn main:app --host 0.0.0.0 --port 8000

This command starts the API on your local machine, listening on port 8000. You can now test your API using tools like Postman to send requests and verify the predictions.
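
If you prefer a script over Postman, the same check can be sketched with Python's standard library alone. The payload key features is a placeholder and must match the fields of your own UserInput class; build_predict_request and call_predict are illustrative helper names, not part of FastAPI.

```python
import json
import urllib.request
from typing import Any, Dict

API_URL = "http://localhost:8000/predict"  # assumes the server started above


def build_predict_request(payload: Dict[str, Any], url: str = API_URL) -> urllib.request.Request:
    """Build a JSON POST request for the /predict endpoint."""
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def call_predict(payload: Dict[str, Any], url: str = API_URL) -> Any:
    """Send the request and decode the JSON response; needs a running server."""
    with urllib.request.urlopen(build_predict_request(payload, url), timeout=10) as resp:
        return json.loads(resp.read().decode("utf-8"))


# Building the request does not touch the network, so it is safe to inspect.
request = build_predict_request({"features": [5.1, 3.5, 1.4, 0.2]})
print(request.get_method(), request.full_url)
```

With the server running, calling call_predict with a payload that matches your schema should return the decoded JSON body of the response.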

Containerizing the ML API with Docker

Now that we have a functional FastAPI application, let’s containerize it using Docker for deployment.

Dockerfile Creation

Create a file named Dockerfile in your project directory. The Dockerfile defines the instructions for building a Docker image that contains your application and its dependencies. Here’s an example:

    FROM python:3.9
    WORKDIR /app
    COPY requirements.txt .
    RUN pip install -r requirements.txt
    COPY . .
    EXPOSE 8000
    CMD ["uvicorn", "main:app", "--host", "0.0.0.0", "--port", "8000"]

Let’s break down and explain each step:

FROM python:3.9: This line specifies the base image for our container. We’re using the official Python 3.9 image, which provides a clean environment with Python pre-installed.

WORKDIR /app: This sets the working directory within the container to /app. This is where our project files will be copied.

COPY requirements.txt .: This line copies the requirements.txt file, which lists all the Python libraries needed for our application, into the container. If you have not created this file yet, add one to the project directory that lists fastapi, uvicorn, and your ML library.

RUN pip install -r requirements.txt: This line instructs Docker to install the listed dependencies with pip while the image is being built.

COPY . .: This copies all files from the current directory (including your project code) into the container’s working directory (/app).

EXPOSE 8000: This line documents that the container listens on port 8000, the port where our FastAPI application accepts incoming requests. EXPOSE on its own does not publish the port to the host; that happens with the -p flag of docker run.

CMD ["uvicorn", "main:app", "--host", "0.0.0.0", "--port", "8000"]: This line defines the default command to run when the container starts. It executes uvicorn with the same arguments used previously to run the API locally, ensuring the application starts upon container launch.

Building the Docker Image

With the Dockerfile in place, use the docker build command to build a Docker image from your project directory:

    docker build -t my_ml_api .

This command builds an image and tags it with the name my_ml_api. You can replace this name with anything descriptive for your project.

Running the Dockerized ML API

Now that you have a Docker image, you can use docker run to launch a container from that image:

    docker run -p 8000:8000 my_ml_api

This command runs the my_ml_api image and maps the container’s port 8000 to the host machine’s port 8000. This allows you to access the API on your local machine at http://localhost:8000/predict.

Testing the API

With the container running, you can use tools like Postman or curl to send requests to the API endpoint and verify its functionality. Remember, the endpoint is still accessible at /predict, but now it’s running inside the container.
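
A container can take a moment before it accepts connections, so an automated check may want to wait for it first. The following is a small sketch of such a wait loop using only the standard library. The helper names http_ok and wait_until_ready are made up for this example, and the probe URL assumes FastAPI's interactive documentation page is still served at its default /docs path.

```python
import time
import urllib.error
import urllib.request
from typing import Callable


def http_ok(url: str) -> bool:
    """Return True if a GET request to `url` answers with a 2xx status."""
    try:
        with urllib.request.urlopen(url, timeout=2) as resp:
            return 200 <= resp.status < 300
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, or an HTTP error status.
        return False


def wait_until_ready(probe: Callable[[], bool], attempts: int = 10, delay: float = 1.0) -> bool:
    """Call `probe` until it succeeds or the attempts run out."""
    for attempt in range(attempts):
        if probe():
            return True
        if attempt < attempts - 1:
            time.sleep(delay)  # pause before the next try
    return False
```

A typical call would be wait_until_ready(lambda: http_ok("http://localhost:8000/docs")), followed by your actual requests to /predict once it returns True.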

Advantages of This Approach

Deploying ML models with FastAPI and Docker offers several advantages:

· Simplified and maintainable deployment process: Docker handles packaging and isolating your application, streamlining deployment across various environments.

· Increased portability across environments: Docker containers are self-contained, ensuring your model runs consistently regardless of the underlying system.

· Scalability for handling higher workloads: You can easily scale your deployment by running multiple container instances to handle increased traffic.

· Version control and reproducibility: Docker images provide a versioned snapshot of your application, making it easier to reproduce results and roll back to previous versions if needed.

Conclusion

FastAPI and Docker form a powerful combination for deploying ML models efficiently. By leveraging FastAPI’s ease of use and Docker’s containerization capabilities, you can create a streamlined and portable deployment process. This approach empowers you to make your ML models readily available for real-world applications, accelerating innovation and unlocking the potential of your machine-learning projects.
